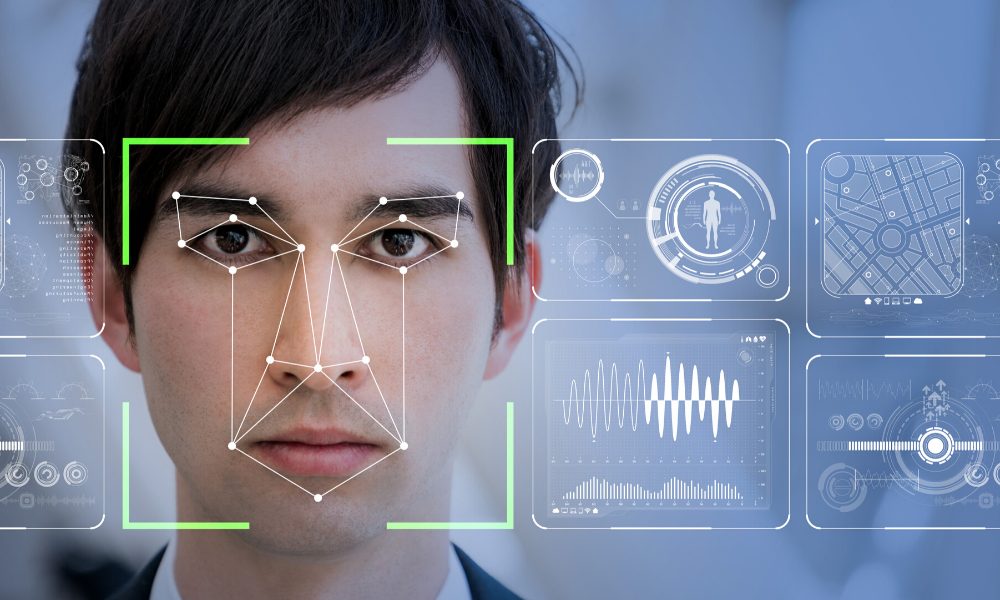

Facial Recognition Technology Sent An Innocent Man To Jail – Responses Are Making The Problem Worse, Not Better

Last month, after facial recognition software identified Robert Williams as the shoplifter of several watches from a store in Detroit, police officers arrested him.

But Williams was innocent.

The software had made a mistake with severe consequences.

Law enforcement does employ procedures to protect innocent people from technology-induced injustices, and the arresting officers violated department procedures by trusting a facial recognition tool without obtaining corroborating evidence. Even so, the fact that police were able to make an arrest and hold a suspect based on nothing more than the output of a faulty computer algorithm shows clearly that the danger of law enforcement agents misusing facial recognition systems is no longer confined to fiction, television, and movies.

While various parties are taking action in an attempt to prevent similar abuses from recurring in the future, many of the approaches being pursued are flawed, and, despite the good underlying intentions of those involved, may actually aggravate matters.

For starters, Amazon, Microsoft, and IBM – along with several other software companies that own and market powerful facial recognition tools – have terminated or paused the sale of such technology to domestic police departments. Such a precaution may sound wise, but its true efficacy is highly questionable, and it may even prove counterproductive.

To begin with, many police departments use products from vendors other than those that are now pausing sales, and those law enforcement agencies that do lose their ability to license their current products of choice can easily obtain technology from any one of many non-boycotters. The software misused by Detroit officers, for example, was sold to the department by a police contractor, and was apparently powered by algorithms from two vendors, one based in Japan and one based in the USA; none of the three parties involved has indicated that it will stop selling to US domestic law enforcement agencies.

In fact, while huge software firms such as Amazon, Microsoft, and IBM, which derive only tiny portions of their revenues from the sale of facial recognition technology, can afford to pause sales to law enforcement, it is unreasonable to expect that smaller vendors that focus most or all of their resources on this area will follow suit; the latter will simply not cut off one of their biggest sources of revenue and risk destroying their entire businesses.

The bottom line is that no business or group of businesses is going to stop the police from obtaining facial recognition systems. Furthermore, because facial recognition algorithms are not regulated by standards or subject to mandatory audits or quality checks, if firms with superior offerings refuse to sell to law enforcement, police are certainly going to utilize inferior systems that will undoubtedly make more frequent errors, thereby increasing both the number of criminals escaping justice and the number of innocent people being falsely accused and arrested. (Companies can submit their facial recognition technologies to the U.S. Department of Commerce’s National Institute of Standards and Technology (NIST) for its Face Recognition Vendor Test (FRVT), but doing so is entirely voluntary, and has no bearing on a company’s ability to sell its technology in the USA.)

The lack of control over providers and their offerings is exacerbated by the nature of the technology market; technology businesses frequently invent new technologies, improve upon older technologies, license technologies to one another, merge, rebrand, and acquire other firms’ assets – often doing so through agreements protected by strict non-disclosure agreements – all of which makes it effectively impossible to keep track of the entire list of parties with access to any particular type of technology. Couple that with the fact that many deals related to cutting-edge technologies such as facial recognition involve at least one party headquartered overseas, and it becomes clear that Pandora’s box is already open when it comes to the spread and potential abuse of facial recognition technologies; not even the combined prowess of Amazon, Microsoft, and IBM can stop the spread of dangerous possibilities.

Furthermore, it is clear that many governments around the world already have facial recognition technology that is at least as powerful as the offerings of major commercial software providers (some governments may have even stolen their technology from one or more such businesses) – and there is little doubt that they use it extensively. I am quite certain, for example, that, if it so wishes, the Chinese government could recognize me instantly in any location in which it can install or access cameras. Software vendors refusing to license facial recognition technology to police departments will not do anything to prevent abuse by foreign countries, intelligence agencies, and law enforcement groups that already have their own systems, and may encourage others to develop (or steal) technologies of their own. It is also likely that various criminal enterprises have and use such technologies – and it is hardly in the public interest that criminals be armed with sophisticated technologies that law enforcement lacks.

While no action by major software companies is going to do much to prevent the abuse of facial recognition technologies, strictly enforced laws that govern their use certainly can. While laws will not prevent abuse by foreign governments and criminals, they can provide for safe use by domestic law enforcement and for the protection of individual rights. Relevant laws must not be crafted haphazardly; we need to convene groups of experts on the relevant technologies, privacy, security, legal, and ethical issues, and have them provide ideas, input, and guidance to those who will ultimately need to enact the laws. The arrest of Robert Williams last month should serve as a loud wake-up call; we need to get moving on such an effort.

In the meantime, American companies that are presently refusing to sell to law enforcement might want to reconsider their positions. Are Americans really better off with police departments purchasing software from foreign firms not subject to American laws and regulations than they would be if the software were provided by domestic technology companies? Do we really want facial recognition technology to be used in the USA if its ultimate providers are beholden to foreign governments and can, much more easily than their domestic counterparts, escape any potential prosecution for misdeeds?

CyberSecurity for Dummies is now available at special discounted pricing on Amazon.

Give the gift of cybersecurity to a loved one.
